Forget the terminological

Whatever works, WORKS.

For me, it is finding a pattern in the past that has 35 or more trade triggers, with a max(ref point of today's high + next day's high), that has a 70% chance of making 3% within 10 bars and a 94% chance of making it within 60 bars. This is buying at the next bar's open.
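A rough sketch of how hit rates like these could be counted, in Python rather than AFL. The function name `hit_rate`, the plain-list inputs, and the rule that the 3% is measured from the next-bar-open entry price and counts as "made" when any bar's high in the window reaches the target are all my assumptions, not the poster's actual method.

```python
from typing import List, Sequence, Tuple


def hit_rate(
    opens: Sequence[float],
    highs: Sequence[float],
    triggers: List[int],
    target_pct: float = 3.0,
    horizon: int = 10,
) -> Tuple[int, int]:
    """Count triggers whose trade reaches the profit target within `horizon` bars.

    Assumptions (hypothetical, for illustration only):
      - a trigger on bar t means buying at the open of bar t + 1
      - the target is entry price * (1 + target_pct / 100)
      - the target is hit if any high in the window, entry bar included,
        reaches it
    Returns (hits, total) so the caller can also check the sample size.
    """
    hits = 0
    total = 0
    for t in triggers:
        entry_bar = t + 1
        # Skip triggers that do not have a full window of future bars.
        if entry_bar + horizon > len(opens):
            continue
        entry_price = opens[entry_bar]
        target = entry_price * (1 + target_pct / 100)
        window = highs[entry_bar:entry_bar + horizon]
        total += 1
        if max(window) >= target:
            hits += 1
    return hits, total


if __name__ == "__main__":
    opens = [100, 100, 100, 100, 100, 100]
    highs = [100, 101, 102, 103.5, 101, 100]
    hits, total = hit_rate(opens, highs, triggers=[0], horizon=3)
    print(f"{hits} of {total} triggers reached the target")
```

Running it once with `horizon=10` and once with `horizon=60` gives the two percentages, and `total` shows whether the pattern clears the 35-trigger minimum.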

On 2/3/2016 12:48 PM, rosenberggregg@yahoo.com [amibroker] wrote:


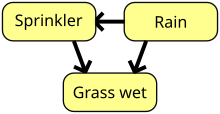

I would just chime in to second Sean's cautionary remarks. There are other types of learning networks that are less of a black box and so address his concerns better. For my work, I use Bayesian networks. There's an intro to them on Wikipedia, if you or your employee want to read up (link below). These, however, are very computationally intensive and require you to be very selective about your network topology.

BTW, regarding Howard's remarks: if you get into it enough, you'll also see there is some terminological ambiguity. Not everyone uses "neural network" to refer just to supervised learning. Many people (myself included) use the term more broadly to refer to any connectionist network in which the semantics of at least some nodes and all connections are left to be defined by the learning algorithm via its pattern recognition, and distinguish within it between supervised and unsupervised learning algorithms. A short primer of terms you might see:

1) Semantic network - A network of nodes and connections whose meanings are all determined by the programmers.
2) Perceptrons - An input-output network in which the semantics of all nodes are determined by the programmer but the meaning of the connections is determined by training.
3) Neural Network - A network in which the semantics of at least some of the nodes and all of the connections are determined by training.
4) Annealing - The dominant class of unsupervised training algorithms.
5) Propagation - The dominant class of supervised training algorithms.

Bayesian networks do not fit neatly into any of these classifications, as the meaning of the connections corresponds transparently to Bayesian conditional probabilities, while leaving it flexible whether or not all nodes need previously defined semantics. To create a "neural" type Bayesian network where there are hidden nodes whose meaning is determined during learning, you can maximize a measure like mutual information between nodes, subject to the constraint of an objective function. During learning, the network will make "hypotheses" about connections to the hidden nodes and eventually settle on values.
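For anyone who hasn't met mutual information before, here is a minimal Python sketch of the measure itself, estimated from paired samples of two discrete variables. It only shows the quantity being maximized, not the hidden-node learning procedure described above; the function name `mutual_information` and the plug-in (count-based) estimate are my own choices for illustration.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def mutual_information(xs: Sequence[Hashable], ys: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(X; Y) in bits from paired discrete samples.

    I(X; Y) = sum over (x, y) of p(x, y) * log2( p(x, y) / (p(x) * p(y)) )
    Probabilities are estimated as simple relative frequencies.
    """
    if len(xs) != len(ys) or not xs:
        raise ValueError("xs and ys must be non-empty and the same length")
    n = len(xs)
    joint = Counter(zip(xs, ys))  # counts of each (x, y) pair
    count_x = Counter(xs)         # marginal counts for X
    count_y = Counter(ys)         # marginal counts for Y
    mi = 0.0
    for (x, y), c in joint.items():
        p_xy = c / n
        p_x = count_x[x] / n
        p_y = count_y[y] / n
        mi += p_xy * math.log2(p_xy / (p_x * p_y))
    return mi


if __name__ == "__main__":
    # Perfectly dependent binary variables share one full bit.
    print(mutual_information([0, 0, 1, 1], [0, 0, 1, 1]))
    # Independent binary variables share nothing.
    print(mutual_information([0, 0, 1, 1], [0, 1, 0, 1]))
```

A high value between two nodes means knowing one tells you a lot about the other, which is the sort of signal a learning procedure could use when deciding which connections to a hidden node are worth keeping.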

Net net, I'd just emphasize not to be upset with your employee for not getting great results right off the bat. This is a huge, highly technical field that is advancing very quickly. If you decide to pursue a solution in it, expect to have to invest time in trial and error and human learning.


Posted by: Nick <nickhere@yahoo.com>


**** IMPORTANT PLEASE READ ****

This group is for the discussion between users only.

This is *NOT* technical support channel.

TO GET TECHNICAL SUPPORT send an e-mail directly to

SUPPORT {at} amibroker.com

TO SUBMIT SUGGESTIONS please use FEEDBACK CENTER at

http://www.amibroker.com/feedback/

(submissions sent via other channels won't be considered)

For NEW RELEASE ANNOUNCEMENTS and other news always check DEVLOG:

http://www.amibroker.com/devlog/
